As a Product Manager, especially if you manage digital products, you typically have a wealth of data at your fingertips about how customers use your product. However, you shouldn’t let your product decisions be driven by data alone.

Jens-Fabian Goetzmann, Head of Product at RevenueCat, explains the dangers of Goodhart’s law, why you can’t A/B test your way to greatness, and why being data-driven can slow down decision-making in your organization.

You can read his original blog post below. He also presented this material in a webinar for Product Focus.

A wealth of data available

Before the advent of SaaS and ubiquitous internet access, software companies had very little information about how end users used their products. They had to painstakingly conduct research to understand their customers’ behavior. Due to the long lead times to ship software in physical form, they could not simply make changes and see how their customers reacted.

Today’s world of product development couldn’t be more different. It’s easy to measure everything in your product – user behavior, feature adoption, conversion rates. With this comes the ability to accurately track how changes to the product impact all of these metrics, not just anecdotally but with cold, hard data.

Making product decisions based on data has much appeal. Tastes and convictions can differ, and nothing settles an argument better than simply trying different approaches and measuring the results. And while product management is always both an art and a science, making product decisions based on data tilts the balance toward the “science” side, appealing to many STEM-educated people involved in product development.

The ultimate form of this is the “data-driven” product manager, who bases product decisions on data as much as possible and tries to set up quantifiable criteria for all product development. Improvement opportunities are identified based on where the metrics lag behind benchmarks. Changes to the product are evaluated based on how much they could move previously defined key performance indicators (KPIs).

There are many good aspects of this approach. Instrumenting the product, collecting quantitative evidence, and setting up success criteria before launching product changes are all practices that I would highly recommend. However, going entirely “data-driven” is a dead end, and here’s why.

The perils of Goodhart’s Law

One of the fundamental flaws of the data-driven approach is that it relies on the unshaken belief in KPIs. After rigorous data analysis, you define good metrics that reflect the critical drivers of your product’s customer and business value. Then you tune your product development engine to keep improving those KPIs. Sounds great, doesn’t it?

“Not so fast,” calls out Charles Goodhart, wielding his law like a hammer:

When a measure becomes a target, it ceases to be a good measure.

What does this mean? It means that as soon as you make a measure a KPI and start trying to improve it, the measure is no longer useful. Let’s look at some examples. Let’s say your measure is daily active users, often used as a KPI because it is a proxy for how many users get value from your product daily. Now, you send a bunch of push notifications, and the number goes up. Did your product improve? Hardly. Did more people get value out of the product? Debatable.

The issue here is that most measures are only proxies. These leading indicators hopefully correlate to some lagging indicators we are interested in (like retention, some monetization event, or customer lifetime value).

It gets worse than that, however. Even if we take measures that aren’t proxies, Goodhart’s Law still applies. Let’s use revenue: not a proxy, just money flowing to the company and a prerequisite for a thriving business. However, I can tell you a surefire way to grow revenue: sell dollar bills for ninety cents. Of course, no company is doing precisely that. Still, many do something similar enough: they spend more to acquire customers than they get back in customer lifetime value. If you spend a dollar on Facebook ads to acquire a customer with a lifetime value of ninety cents, that is equivalent to the dollar bill example. “Sure, we’re losing ten cents on every sale, but we’ll make it up in volume” is an age-old joke that doesn’t lose its relevance. (For prominent real-life examples, look at ride-hailing companies. With billions of dollars in VC money poured into growth, it’s still unclear whether there is a sustainable business model.)
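To make the arithmetic concrete, here is a minimal sketch using the numbers from the example above (one dollar to acquire a customer, ninety cents of lifetime value). The function name and the cohort sizes are made up for illustration; they are not real company data.

```python
def unit_economics(customers: int, cac: float, ltv: float) -> dict:
    """Return lifetime revenue, acquisition spend, and net result for a cohort.

    cac = customer acquisition cost, ltv = customer lifetime value (both per customer).
    """
    revenue = customers * ltv
    spend = customers * cac
    return {
        "customers": customers,
        "revenue": revenue,
        "spend": spend,
        "net": revenue - spend,
    }


# Hypothetical cohorts: $1.00 to acquire each customer, $0.90 of lifetime value.
for customers in (1_000, 10_000, 100_000):
    result = unit_economics(customers, cac=1.00, ltv=0.90)
    print(
        f"{result['customers']:>7,} customers: "
        f"revenue ${result['revenue']:>10,.2f}, net ${result['net']:>11,.2f}"
    )
```

Revenue grows tenfold with every row, and so does the loss. If revenue is the only number on the dashboard, this looks like success.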

Let’s make profits the KPI, then. Surely that must be it. Profits don’t lie. If I increase profits, then I must be doing something right. Sadly, no. Firing your whole engineering team will undoubtedly increase profits in the short term, but does anyone believe you can build a sustainable business that way?

In the end, taking into account Goodhart’s Law, you need to be careful and qualitatively reason whether the changes you are observing are real, sustainable changes or whether the connection between the KPI and the long-term success driver of the business has been lost.

Baby steps to climb a hill

Another issue with the data-driven approach is that it favors incremental improvements. It is far easier to find minor tweaks that have a small positive impact on the KPI than to find significant changes with big positive results. The reason is twofold. Firstly, you can test small changes much more quickly. Google’s infamous “41 shades of blue” test is a good example: it takes minimal effort to test different colors until you find one that slightly improves the conversion rate. Success! Donuts all around, we shipped a winning feature.

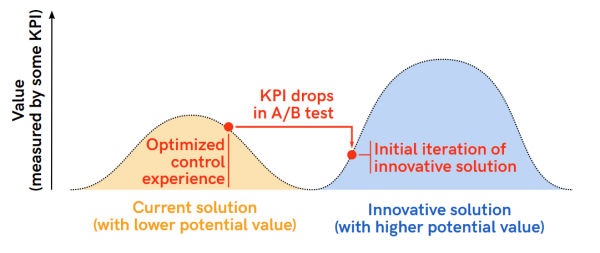

Secondly, these small incremental wins often lead to the current solution approaching a local maximum. You have optimized the heck out of the current solution, which runs like a well-oiled machine. If you now test a different solution, a more radical change, against it, it won’t be as optimized. If you only analyze how it performs, as measured by cold, hard data, it often won’t beat the optimized control experience – but that might be because it’s not yet living up to its potential.

[Figure: graph of value for the current solution versus an innovative solution]

You can try teaching your product managers and product teams that failure is part of the job and that they should shoot for the moon and perhaps land among the stars. The truth is, though, that everyone likes to be successful. It’s natural to prefer running five small tests out of which one or two were winners to running one big test which failed.

Moreover, because of the local maximum problem, if you are strictly data-driven, you never know whether you have reached the top of a lower hill or are standing at the foot of a bigger one. The data won’t tell you that, which leads directly to the next point.
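The local maximum problem can be illustrated with a toy simulation. This is only a sketch: the value function below is an invented landscape with a small hill near x = 2 and a taller one near x = 8, and the greedy loop stands in for a team that only ships changes that beat the current version in a test.

```python
import math


def value(x: float) -> float:
    """Hypothetical value landscape: a small hill near x=2, a taller hill near x=8."""
    small_hill = 3.0 * math.exp(-((x - 2.0) ** 2))
    tall_hill = 10.0 * math.exp(-((x - 8.0) ** 2) / 4.0)
    return small_hill + tall_hill


def hill_climb(start: float, step: float = 0.1, max_steps: int = 1000) -> float:
    """Greedy incremental optimization: move only if a neighboring point scores better."""
    x = start
    for _ in range(max_steps):
        best = max((x - step, x, x + step), key=value)
        if best == x:  # no small change improves the metric, so we stop
            break
        x = best
    return x


for start in (1.0, 6.0):
    peak = hill_climb(start)
    print(f"start={start:.1f} -> stops near x={peak:.1f} with value {value(peak):.2f}")
```

Starting at 1.0, the climber stops on the small hill (a value of about 3), because every small step from there makes the metric worse. Starting at 6.0, it reaches the tall hill (a value of about 10). Nothing in the measurements taken on the small hill reveals that the taller one exists.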

Solving the hard problems

Great products aren’t built by starting with a KPI and then trying to find ways to improve it. The best products are built by focusing on a problem, banging your head against the wall, and trying to solve it. Being data-driven can lead you to think opportunistically rather than strategically.

There are, of course, good reasons to act opportunistically. If you might unlock a big new sale or partnership by doing something that is ever so slightly adjacent to what you are doing currently, it might be wise to leap on that opportunity. However, the big question here should be whether that furthers the company’s strategic goals, not whether it drives up some KPI or another. Otherwise, it becomes a wild goose chase: an unfocused effort to do whatever it takes to move KPIs rather than being good at solving the core problem that the product should be solving.

I’ve written a longer piece about this: You Can’t A/B Test Your Way to Greatness.

Perfect is the enemy of done

The last major issue with being data-driven is that it can slow down the organization. If decisions aren’t made until sufficient data is available, they will be made later than they should be. It is a bit counter-intuitive at first – after all, product analytics data is usually available in real-time, whereas gathering more qualitative feedback from customers requires time to collect.

However, you can collect qualitative feedback much earlier in the development lifecycle than you can measure impact quantitatively. You can present a low-fidelity prototype to a handful of users before a line of code has been written. An A/B test, on the other hand, requires a reasonably functional implementation.

Data-driven product managers will scoff at the sample size of a few prototype testing sessions. However, you will typically see the findings converge after five to ten interviews. Sometimes, a single conversation with a customer is enough to uncover a flawed assumption underlying the current approach.

Do A/B testing and other quantitative validation approaches yield more “accurate,” reliable results? Perhaps. But in a world where speed to market and pace of continuous innovation often determine who emerges the winner, and where the next hungry startup is always waiting to disrupt any incumbent who gets too complacent, you don’t have the luxury of waiting for the perfect information.

The solution: Be data-informed, not data-driven

It’s not that relying on data to make product decisions is inherently bad. It can be helpful, as stated at the top of this article. Accurate knowledge of how your product is being used is valuable, so don’t disregard that. However, don’t let the data drive you. Become data-informed, instead. Instrument your product, and use that data to improve areas that don’t work as well as others. Use A/B testing if you are trying to optimize something. If you are trying to find the best-performing copy on your paywall, an A/B test is often the fastest and most reliable way to get results. Also, do define success measures for product changes and go back after the fact to validate that the changes did what you hypothesized.
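For an optimization question like the paywall copy, the evaluation itself can be simple. The sketch below uses a two-proportion z-test, which is one common way to compare conversion rates and not necessarily what any particular experimentation tool does. The visitor and conversion counts are invented for illustration.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple:
    """Return (z statistic, two-sided p-value) for the difference in conversion rates.

    conv_* are conversion counts and n_* are visitor counts for variants A and B.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # conversion rate if A and B were identical
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return z, p_value


# Hypothetical paywall test: copy A converts 200 of 5,000 visitors, copy B 250 of 5,000.
z, p = two_proportion_z_test(200, 5_000, 250, 5_000)
print(f"z = {z:.2f}, p-value = {p:.4f}")
```

With these made-up numbers the p-value comes out below 0.05, so copy B would usually be declared the winner. What the test cannot tell you is whether the paywall was the right thing to be optimizing in the first place.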

On the other hand, balance that quantitative perspective with a qualitative one. Ground your product decisions in mission and strategy, not just in how to achieve quantitative goals. Talk to customers. Test low-fidelity prototypes. And above all, question whether data and intuition (“product sense”) tell you the same thing. If something should move metrics and it doesn’t, or it shouldn’t move metrics and it does, ask yourself “why.” Better yet, don’t just ask yourself; use user research to find out why. The answers to these questions will tell you more about your customers and your product than only looking at numbers ever would.

You can read more of Jens’s blog posts at www.jefago.com
